There has recently been increasing interest in devices called FPGAs as an alternative to microprocessor electronics.

In this post, we will see what an FPGA is and why it has become so popular in the geek/maker world. In future posts, we will delve into Open Source FPGAs hand in hand with the Alhambra FPGA.

What is an FPGA?

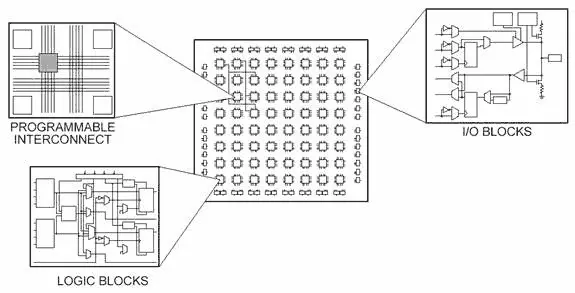

An FPGA (Field-Programmable Gate Array) is an electronic device made up of functional blocks joined through an array of programmable connections.

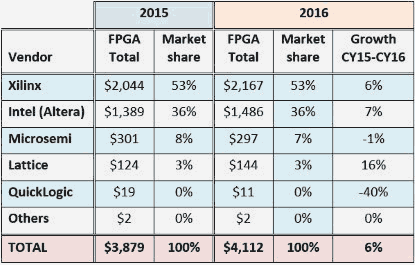

FPGAs were invented in 1984 by Ross Freeman and Bernard Vonderschmitt, co-founders of Xilinx. The main manufacturers include Xilinx, Altera (bought by Intel in 2015), Microsemi, Lattice Semiconductor, and Atmel, among others.

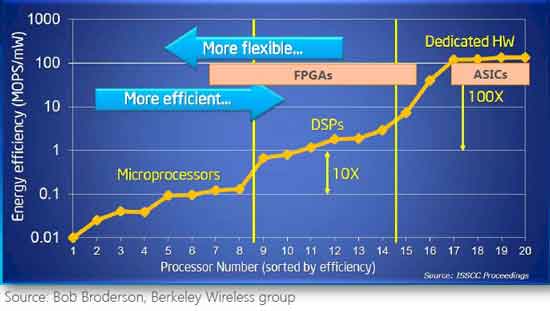

FPGAs are slower than ASICs (Application-Specific Integrated Circuits), which are chips designed and manufactured for one particular task. However, because an FPGA can be reconfigured, it is cheaper for both prototyping and small production runs, given the enormous expense of developing and manufacturing an ASIC.

FPGAs are used in the digital integrated circuit industry and in research centers to create digital circuits for ASIC prototypes and small production runs.

How does it differ from a processor?

Although at first glance a processor and an FPGA seem similar, since both can be made to perform certain tasks, once we dig into the topic it is almost easier to find differences than similarities.

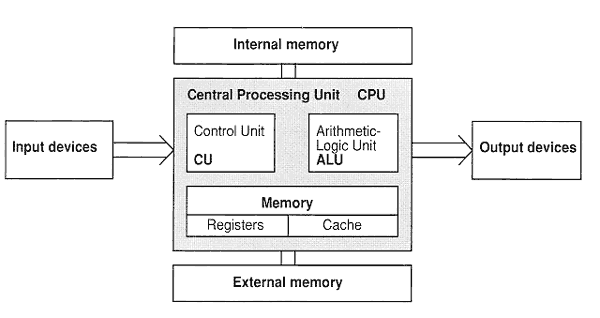

To get started, let’s briefly recall how a processor works. A processor provides a set of instructions that perform operations on binary operands (add, increment, read from and write to memory). Some processors have more instructions than others (each instruction is tied to the internal circuitry of the processor), and this is one of the factors that determine performance.

It also contains a series of registers, which hold the input and output data of the processor’s operations. In addition, there is memory to store information.

Finally, a processor has a sequence of instructions held in memory, which is the program to be executed in machine code, and a clock.

In each clock cycle, the processor reads the next instruction and the values it needs from the program, selects the corresponding operation, and executes the calculation.
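As a rough illustration of the cycle just described, here is a minimal sketch of a toy processor in Python. The instruction names (`LOAD`, `ADD`, `INC`, `STORE`, `HALT`), the two registers, and the `run` function are all invented for this example, and it assumes one instruction per clock cycle, which real processors do not guarantee.

```python
def run(program, memory):
    """Run a toy program and return the number of simulated clock cycles."""
    registers = {"A": 0, "B": 0}  # hypothetical two-register file
    pc = 0                        # program counter: index of the next instruction
    cycles = 0
    while True:
        op, *args = program[pc]   # fetch and decode one instruction
        pc += 1
        cycles += 1               # simplification: one instruction per cycle
        if op == "LOAD":          # LOAD reg, addr: copy memory[addr] into reg
            reg, addr = args
            registers[reg] = memory[addr]
        elif op == "ADD":         # ADD dst, src: dst = dst + src
            dst, src = args
            registers[dst] += registers[src]
        elif op == "INC":         # INC reg: reg = reg + 1
            (reg,) = args
            registers[reg] += 1
        elif op == "STORE":       # STORE reg, addr: copy reg into memory[addr]
            reg, addr = args
            memory[addr] = registers[reg]
        elif op == "HALT":        # stop and report how many cycles were used
            return cycles
        else:
            raise ValueError(f"unknown instruction: {op}")


# Example program: memory[2] = memory[0] + memory[1]
PROGRAM = [
    ("LOAD", "A", 0),
    ("LOAD", "B", 1),
    ("ADD", "A", "B"),
    ("STORE", "A", 2),
    ("HALT",),
]

memory = [2, 3, 0]
cycles = run(PROGRAM, memory)
print(memory, cycles)
```

The point to notice is that the structure of `run` never changes. Only the contents of `PROGRAM` do, and the instructions are executed one after another.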

When we program a processor, we use one of the many available languages, in a form that is readable and comfortable for us. During compilation and linking, the code is translated into machine code, which is stored in memory. From there, the processor executes the instructions, and therefore our program.

However, when programming an FPGA, what we are doing is modifying an array of connections. The individual blocks are made up of elements that allow them to adopt different transfer functions.

Together, the blocks, linked by the connections we program, physically constitute an electronic circuit, much as if we had wired it on a breadboard or manufactured our own chip.

As we can see, the difference is substantial. A processor (in its many variants) has a fixed structure, and we change its behavior through the program we write, which is translated into machine code and executed sequentially.

In an FPGA, by contrast, we change the internal structure itself, synthesizing one or several electronic circuits inside it. When we “program” the FPGA, we define the electronic circuits we want it to implement.
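To make the idea of configurable blocks and programmable connections more concrete, here is a small Python sketch. It assumes each block is a lookup table (LUT), which is how many FPGAs build their logic blocks, and it models the connection array as a plain dictionary. The names `make_lut`, `BLOCKS`, `CONNECTIONS`, and `evaluate` are hypothetical, and real blocks also contain elements such as flip-flops that are left out here.

```python
from itertools import product


def make_lut(truth_table):
    """Return a block whose behavior is fixed by its truth table."""
    def lut(*inputs):
        index = 0
        for bit in inputs:            # first input is the most significant bit
            index = (index << 1) | bit
        return truth_table[index]
    return lut


# "Configuration" of two 3-input blocks, indexed by (a, b, cin).
SUM_TABLE = [0, 1, 1, 0, 1, 0, 0, 1]    # a XOR b XOR cin
CARRY_TABLE = [0, 0, 0, 1, 0, 1, 1, 1]  # 1 when at least two inputs are 1

BLOCKS = {"sum": make_lut(SUM_TABLE), "carry": make_lut(CARRY_TABLE)}

# "Programmable connections": which signals feed each block.
CONNECTIONS = {"sum": ("a", "b", "cin"), "carry": ("a", "b", "cin")}


def evaluate(signals):
    """Drive the input signals through the configured blocks."""
    values = dict(signals)
    for name, block in BLOCKS.items():
        values[name] = block(*(values[src] for src in CONNECTIONS[name]))
    return values


# The two blocks together behave as a 1-bit full adder.
for a, b, cin in product((0, 1), repeat=3):
    out = evaluate({"a": a, "b": b, "cin": cin})
    print(a, b, cin, "->", out["carry"], out["sum"])
```

Changing the truth tables or the entries in `CONNECTIONS` yields a different circuit without touching `evaluate`, which is loosely analogous to reconfiguring the FPGA.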

How is an FPGA programmed?

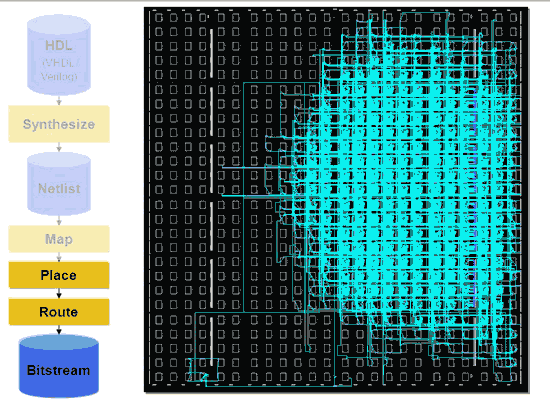

FPGAs are not “programmed” in the sense we are used to, with a language like C, C++, or Python. Instead of a programming language, FPGAs use a different kind of language called a description language.

These languages are called HDLs (Hardware Description Languages). Examples are Verilog, VHDL, and ABEL. Verilog is an open standard with good Open Source tool support, so it is one of the names you will hear most often.

Description languages are not exclusive to FPGAs. On the contrary, they are an extremely useful tool in chip and SoC design.

Next, the synthesizer (broadly, the equivalent of the “compiler” in programming languages) translates our description into a design that can be built from the FPGA’s blocks, and determines the connections to be made.

The resulting configuration is encoded in a format specific to the FPGA (the bitstream), which is sent to the FPGA during programming. The FPGA reads the bitstream and configures its connections. From that moment on, the FPGA implements the circuit we have described.
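As a toy picture of what “encoding a configuration as a bitstream” can mean, the Python sketch below packs the truth tables of two blocks into bytes and recovers them again. The layout (tables concatenated, most significant bit first, zero-padded) is invented for this example. Real bitstream formats are vendor-specific and far more involved.

```python
def pack_bitstream(truth_tables):
    """Concatenate configuration bits into bytes (toy layout, MSB first)."""
    bits = [bit for table in truth_tables for bit in table]
    bits += [0] * (-len(bits) % 8)          # pad to a whole number of bytes
    data = bytearray()
    for i in range(0, len(bits), 8):
        byte = 0
        for bit in bits[i:i + 8]:
            byte = (byte << 1) | bit
        data.append(byte)
    return bytes(data)


def unpack_bitstream(data, table_size, count):
    """Recover `count` truth tables of `table_size` bits each."""
    bits = [(byte >> shift) & 1 for byte in data for shift in range(7, -1, -1)]
    return [bits[i * table_size:(i + 1) * table_size] for i in range(count)]


# Hypothetical configuration: two 3-input blocks (sum and carry of an adder).
TABLES = [
    [0, 1, 1, 0, 1, 0, 0, 1],
    [0, 0, 0, 1, 0, 1, 1, 1],
]

bitstream = pack_bitstream(TABLES)
print(bitstream.hex())
print(unpack_bitstream(bitstream, table_size=8, count=2) == TABLES)
```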

HDLs have a steep learning curve. The greatest difficulty is their very low level of abstraction, since they describe electronic circuits. As a result, the code grows enormously as projects become more complex.

Manufacturers provide commercial tools to program their own FPGAs. Today these are complete environments with a large number of tools and features. Unfortunately, most of them are not free, or are free only for some of the manufacturer’s FPGA models, and they are tied to the architecture of a single manufacturer.

As FPGAs have developed, other languages have appeared that offer a higher level of abstraction, similar to C, Java, or Matlab. Examples are SystemC, Handel-C, Impulse-C, and Forge, among others.

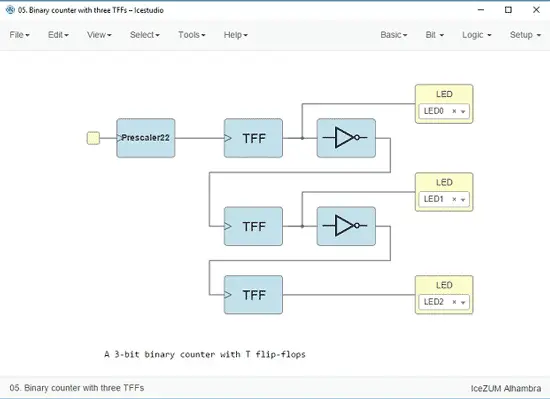

Tools for graphical programming of FPGAs have also appeared, such as LabVIEW FPGA or the Open Source project IceStudio, developed by Jesús Arroyo Torrens.

Finally, some initiatives convert a programming language into HDL (usually Verilog), which can then be loaded into the FPGA with the FPGA’s own tools. Examples are the Panda project, the Cythi project, or MyPython, among others.

Why do we need to simulate an FPGA?

When we program a processor, a mistake usually has no serious consequences. We will usually even have an environment where we can debug and trace the program, set breakpoints, and follow the program flow.

However, when programming an FPGA, we are physically configuring a system and, in case of an error, we could cause a short circuit and damage part or all of the FPGA.

For that reason, as a general rule, we always simulate a design before loading it into the real FPGA.

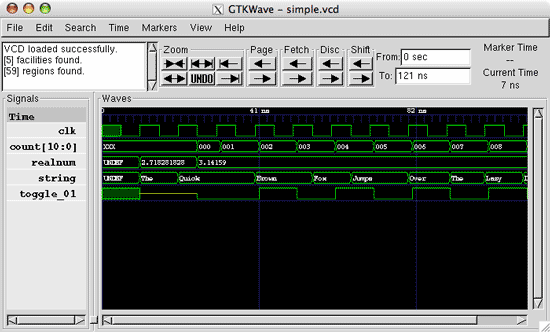

Description languages are also used for simulation, together with software that simulates the design and plots the FPGA’s response. GTKWave, for example, is a viewer for the resulting waveforms.
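To give a feel for what simulating a design involves, here is a minimal Python sketch that steps a model of a synchronous counter through a number of clock cycles, records its output, and draws a crude text waveform. In a real workflow the design and its testbench would be written in an HDL and inspected in a waveform viewer. The function names and the `#`/`_` rendering are assumptions made for this example.

```python
def counter_step(state, reset, width=4):
    """Next value of a synchronous counter after one rising clock edge."""
    if reset:
        return 0
    return (state + 1) % (1 << width)   # wrap around at 2**width


def simulate(cycles, reset_cycles=(0,), width=4):
    """Step the counter and record its value after every clock edge."""
    state = 0
    trace = []
    for cycle in range(cycles):
        state = counter_step(state, cycle in reset_cycles, width)
        trace.append(state)
    return trace


def bit_waveform(trace, bit):
    """Render one output bit over time: '#' is high, '_' is low."""
    return "".join("#" if (value >> bit) & 1 else "_" for value in trace)


trace = simulate(18)
# A simple testbench check: the counter must wrap from 15 back to 0.
assert trace[15] == 15 and trace[16] == 0
for bit in range(3, -1, -1):
    print(f"q[{bit}] {bit_waveform(trace, bit)}")
```

If the wrap-around check failed, we would find out on the computer rather than on the physical device.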

Commercial suites usually integrate the simulation tool within the programming environment itself.

How much does an FPGA cost?

Naturally, there is a wide range of prices, but in general they are not cheap devices. In the domestic sector (the ones we are going to buy), they cost approximately 20 to 80€.

To put this in context, that is much more expensive than an Arduino Nano (16 MHz) or an STM32 (72 MHz), which we can buy for 1.5€, or a NodeMCU ESP8266 (160 MHz + WiFi), which we can buy for 3.5€. It is even more expensive than an Orange Pi (quad-core 800 MHz + WiFi), which can be found for around 20€.

How powerful is an FPGA?

It is difficult to define the computing power of an FPGA, as it is completely different from a processor like the one we can find in an Arduino, an STM32, an ESP8266, or even a computer like the Raspberry Pi.

FPGAs excel at performing tasks in parallel and at extremely fine control of timing and task synchronization.

In reality, it is best to think in terms of an integrated circuit. Once programmed, the FPGA physically constitutes a circuit. In general, as we have mentioned, an FPGA is slower than the equivalent ASIC.

The power of an FPGA is given by the number of available blocks and the speed of its electronics. Other factors also come into play, such as the makeup of each block and extra elements like RAM or PLLs.

To continue with the comparison, the speed of a processor is determined by its clock frequency. We must also bear in mind that a processor frequently needs 2 to 4 instructions to perform one operation.

On the other hand, although FPGAs normally include a clock for synchronous processing, in some tasks the speed does not depend on the clock and is determined instead by the speed of the electronic components involved.

As an example, on the well-known Lattice iCE40 FPGA, a simple circuit like a counter can run at 220 MHz (according to the datasheet). In a single FPGA, we can build hundreds of these blocks.
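The figures above can be turned into a back-of-the-envelope comparison. The Python sketch below takes the 16 MHz Arduino and the 220 MHz counter from this post, and adds three assumptions of its own: one instruction per clock cycle on the processor, 3 instructions per operation (the middle of the 2 to 4 range), and 100 parallel counters as a stand-in for “hundreds”. It counts one counter increment as one operation, so treat the result as an order of magnitude at best.

```python
def processor_ops_per_second(clock_hz, instructions_per_op):
    """Sequential processor, assuming one instruction per clock cycle."""
    return clock_hz / instructions_per_op


def fpga_ops_per_second(clock_hz, parallel_blocks):
    """Independent blocks that all update on every clock cycle."""
    return clock_hz * parallel_blocks


ARDUINO_HZ = 16e6          # from the text
FPGA_COUNTER_HZ = 220e6    # from the text (iCE40 datasheet figure)
INSTRUCTIONS_PER_OP = 3    # assumption: midpoint of "2 to 4"
PARALLEL_COUNTERS = 100    # assumption: stand-in for "hundreds"

cpu = processor_ops_per_second(ARDUINO_HZ, INSTRUCTIONS_PER_OP)
fpga = fpga_ops_per_second(FPGA_COUNTER_HZ, PARALLEL_COUNTERS)
print(f"processor: {cpu:.3g} ops/s")
print(f"FPGA:      {fpga:.3g} ops/s")
print(f"ratio:     {fpga / cpu:.0f}x")
```

The gap comes almost entirely from parallelism: a single counter at 220 MHz is only about 40 times faster than the processor under these assumptions.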

Which is better: Arduino, FPGA, or Raspberry Pi?

Well… which is better, a spoon, a knife, or a fork? They are different tools that excel at different things. Certain tasks can be performed with any of them, but for some it is much more suitable and efficient to use one in particular.

Fortunately, the scientific and technical field is not like a soccer match or politics… we don’t have to choose a side. In fact, we can use them all, even simultaneously. Thus, there are devices that combine a processor with an FPGA to provide us with the best of both worlds.

In any case, FPGAs are a very powerful tool and different enough from the rest to be interesting in themselves.

Can I make a processor with an FPGA?

Of course you can. An FPGA can implement any digital logic circuit, and processors are digital circuits. The only limitation is that the FPGA has to be large enough to accommodate the processor electronics (and processors are not exactly small).

There are projects for small processors that can be configured on an FPGA. Examples are Xilinx’s MicroBlaze and PicoBlaze, Altera’s Nios and Nios II, and the open-source processors LatticeMico32 and LatticeMico8.

There are even projects to emulate historical processors in FPGAs, such as the processor of the Apollo 11 Guidance Computer.

Emulating a processor in an FPGA is an interesting exercise, both for its complexity and for what it teaches. It is also useful if we want to test our own processor design or our own ideas.

However, in most cases, it is simpler and more economical to combine the FPGA with an existing processor. There are very good processors (AVR, ESP8266, STM32).

Why are FPGAs on the rise?

First, because technologies become cheaper over time. Not many years ago, a programmable controller with a capacity similar to an Arduino could cost hundreds or even thousands of euros, and now we can find one for a few euros.

Similarly, FPGAs have been gaining popularity in industry. As production has increased, models with greater capabilities and features have appeared. There are even ranges intended for embedded devices or mobile applications. All this has brought down the price of certain FPGA models.

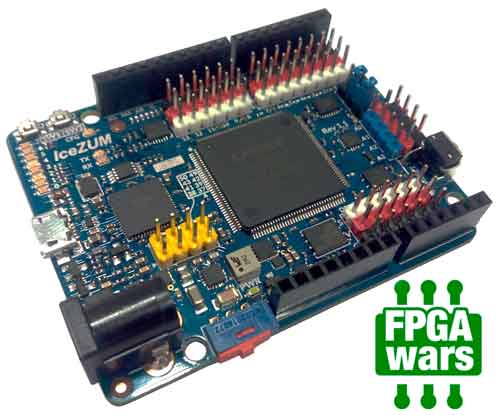

On the other hand, the main reason for the rise in the popularity of FPGAs in the domestic/maker field is the reverse engineering work carried out by Clifford Wolf on the Lattice iCE40 LP/HX 1K/4K/8K FPGA, which gave rise to the IceStorm project.

The IceStorm project is a toolkit (consisting of IceStorm Tools + Arachne-pnr + Yosys) that makes it possible to create the bitstream needed to program an iCE40 FPGA with open-source tools.

Clifford’s work was carried out on an IceStick, a development board with an iCE40 FPGA, chosen for its low cost and modest specifications, which made the reverse engineering feasible.

It was the first time that an FPGA could be programmed with Open Source tools. This gave rise to a growing community of contributors, which has borne fruit in projects such as IceStudio and Apio.

It should be noted that other FPGAs require an investment of hundreds of euros for the hardware and up to thousands of euros for the software.

With some perspective, we could say that the IceStorm project and the Lattice iCE40 were the beginning of a revolution in the FPGA field similar to the one Arduino started with Atmel’s AVR microcontrollers, one that has put this technology within reach of home users.

Do FPGAs have a future, or are they just a passing trend?

Well, even without a crystal ball, FPGAs will most likely remain useful devices, at least in the short and medium term. As we said, they are often used to facilitate the design and prototyping of ASICs. They are also expanding their scope of application, from heavy computation (vision systems, AI, autonomous driving) to lightweight versions for mobile devices.

As an example of their viability, consider that Intel paid 16.7 billion dollars to buy Altera. Market estimates point to 9-10 billion dollars for 2020, compared to 6-7 billion dollars in 2014, an annual growth of 6-7% (far above the average growth of 1-2% for the semiconductor sector).
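As a quick sanity check on those market figures, the Python sketch below computes the compound annual growth rate implied by the 2014 and 2020 estimates. Using the midpoint of each range (6.5 and 9.5 billion dollars) is an assumption made here, not something the estimates state.

```python
def compound_annual_growth(start_value, end_value, years):
    """Constant yearly growth rate that turns start_value into end_value."""
    return (end_value / start_value) ** (1 / years) - 1


MARKET_2014 = 6.5   # billion dollars, assumed midpoint of the 6-7 range
MARKET_2020 = 9.5   # billion dollars, assumed midpoint of the 9-10 range

rate = compound_annual_growth(MARKET_2014, MARKET_2020, years=2020 - 2014)
print(f"implied annual growth: {rate:.1%}")
```

With these midpoints the implied rate falls inside the quoted 6-7% range. Picking other points within the two ranges moves the result noticeably, so the check only shows that the figures are mutually consistent.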

Looking further ahead (years), as FPGAs become cheaper and more popular, we can even imagine hybrid systems combining an FPGA and a processor (or even entirely FPGA-based) in which software reconfigures the hardware, creating or removing processors or memory as needed.

The real question is: do Open Source FPGAs have a future in the “domestic” field, or are they just a passing trend?

The short answer is that we hope so. The long answer is that, as of today, only one FPGA (the iCE40) is compatible with Open Source tools, and in reality it is a fairly small and not very powerful FPGA.

If the technology continues to advance and the community is not strong enough to build an ecosystem that pushes FPGAs towards Open Source, there is a certain risk that this will turn out to be a passing bubble.

The best way forward is to encourage the spread of these devices and to build a strong community that popularizes the technology, if possible by creating and improving the Open Source tools available for FPGAs.